|

tanmayshankar [at] cmu [dot] edu

I'm a Research Scientist at the RAI Institute! I recently finished my Ph.D. at the Robotics Institute at Carnegie Mellon University, where I worked with Jean Oh. My Ph.D. research aimed to learn and translate temporal abstractions of behaviors (such as skills) across humans and robots. Here's a recording of my thesis defense for details, and here's a description of my research interests and philosophy!

I'm interested in solving the human-to-robot imitation learning problem, particularly by building temporal abstractions of behavior across both humans and robots. Much of my work takes a representation learning perspective on this problem, borrowing ideas from unsupervised learning, machine translation, and probabilistic inference. I strongly believe in such interdisciplinary research; for example, my past work has made connections between cross-domain imitation learning and unsupervised machine translation, between value iteration and neural network architectural components, and more. I believe much of my research is applicable not only to robots, but also to dextrous prosthetic hands, and I am keen to explore prosthetics as an application domain. This stems from a broader interest in the potential of assistive and rehabilitation robotics, an area I have been passionate about since my undergrad.

Before my Ph.D., I was a research engineer at Facebook AI Research (FAIR), Pittsburgh for 2 years, where I worked on unsupervised skill learning for robots with Shubham Tulsiani and Abhinav Gupta. Before FAIR, I completed my Master's in Robotics at the Robotics Institute, working on differentiable imitation and reinforcement learning with Kris Kitani and Katharina Muelling. Here's more of my academic history.

In addition to working full time at FAIR, I returned to FAIR Pittsburgh during a summer of my Ph.D., working with Yixin Lin, Aravind Rajeswaran, Vikash Kumar, and Stuart Anderson on translating skills across humans and robots. Before my MS, I did my undergrad at IIT Guwahati, where I worked on reinforcement learning networks with Prithwijit Guha and S. K. Dwivedy. Before I worked on robot learning, I used to work on assistive technology, an area I'm also passionate about. During my undergrad, I also spent summers working with Mykel Kochenderfer at the Stanford Intelligent Systems Lab, and with Howie Choset at the Biorobotics Lab at CMU.

CV / Google Scholar / GitHub / LinkedIn |

|

Updates

[Nov '24] |

I started as a full-time Research Scientist at the RAI Institute! |

[Aug '24] |

I successfully defended my thesis on August 29th! Here's a recording of my talk! |

[Jul '24] |

Our paper on TransAct was accepted to IROS 2024 as an Oral! Check out the paper here, and our results here! |

[Mar '24] |

Check out our real robot results on translating agent-environment interactions here! |

[Mar '24] |

Submitted our work on translating agent-environment interaction abstractions to IROS 2024! |

[Oct '23] |

I'm collaborating with the New Dexterity group from the University of Auckland on a new project! |

[Dec '22] |

Presented my work on learning agent-environment interaction abstractions at the workshop on aligning human-robot representations at CoRL 2022. |

[Nov '22] |

Successfully passed my Ph.D. thesis proposal! Here's a recording of my talk! |

Robot Learning Research |

|

Building on my previous skill learning work (ICML 2020) and translation work (ICML 2022), I developed TransAct, a framework to first learn abstract representations of agent-environment interactions, and then translate interactions with similar environmental effects across humans and robots. TransAct enabled zero-shot, in-domain transfer of complex, compositional task demonstrations from humans to robots. |

|

Inspired by the success of my work translating skills across human and robot arms, I'm working with collaborators from the University of Auckland to explore whether we can apply equivalent strategies to translating EMG signals to control dextrous robot and prosthetic hands. |

|

Inspired by my previous work on representation learning for skill learning, and by Peter Schaldenbrand's prior work on FRIDA, the robot painter, we are exploring, together with Lawrence Chen, whether learnt representations of paint strokes would make it easier to learn new types of paint strokes beyond the ones the robot is preprogrammed with, and so improve FRIDA's artistic expression. |

|

Inspired by the success of my work learning representations of robot skills, I'm exploring whether we can apply equivalent machinery to learning temporal abstractions of environment state. In particular, I hope to learn representations of patterns of motion of objects in the environment, or patterns of change of state. |

|

We developed an unsupervised approach to learn correspondences between skills across humans and various morphologically different robots, taking inspiration from unsupervised machine translation. Our approach is able to learn semantically meaningful correspondences between skills across multiple robot-robot and human-robot domain pairs, despite being completely unsupervised. |

|

Inspired by the success of my work translating skills across human and robot arms, I'm exploring whether we can apply equivalent strategies to translating dextrous manipulation skills across human and robot hands. |

|

We presented an unsupervised approach to learn robot skills from demonstrations. We formulated this as temporal variational inference, which learns skills in an entirely unsupervised manner while also affording a learnt representation space of skills across a variety of robot and human characters. |

|

Learning robot skills from demonstrations using a temporal alignment loss to recompose demonstrations from skills.

|

|

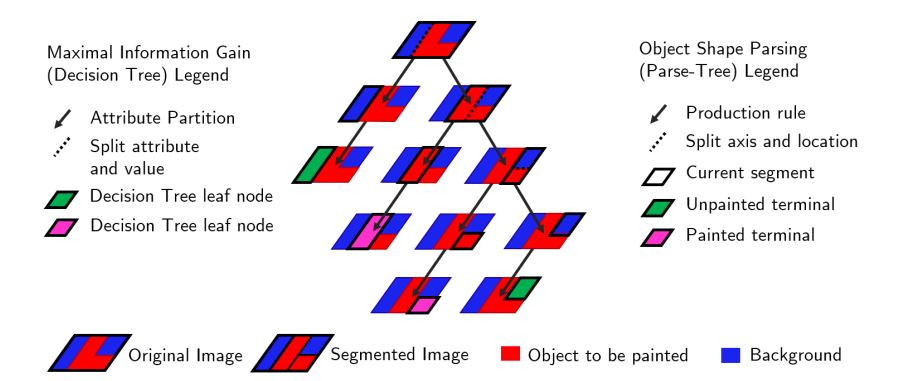

Learning image parsers by imitating ID3 style decision tree oracles, using differentiable variants of imitation learning.

|

|

Representing classical computations in Markov Decision Processes within architectures of Recurrent Convolutional Neural Networks.

|

Assistive Technology Research

|

Designing and prototyping a hybrid wheelchair exoskeleton for assisted mobility.

|

|

Designing and prototyping an assistive vision system for blind individuals.

|

Other Projects

|

Using hierarchical reinforcement learning to sequence predefined primitives.

|

|

Exploring how reinforcement learning networks can be applied to continuous quadrotor control.

|

|

Applying visual SLAM to quadrotors to enable cooperative collision avoidance.

|

|

Using AR-tag-based localization for an interior wing assembly platform.

|

|

Last updated: Jan 2023 |